A Guide to Understanding Scientific Studies (part I)

“There is increasing concern that most current published research findings are false.”

John P. A. Ioannidis, Why Most Published Research Findings Are False

The Problem of Conflicting Studies

When I launched my software company back in 2005, pair programming was considered to be the gold standard in writing code.

Advocates cited studies like The Costs and Benefits of Pair Programming by Alistair Cockburn and Laurie Williams, which claimed that pairing reduced defects and long-term costs despite the higher investment.

Yet when we tried pair programming in our own company, we got mixed results. Our experiences were confirmed by the 2009 study The effectiveness of pair programming by Jo E. Hannay, Tore Dybå and others, which suggested that pair programming is not always beneficial.

This experience raises an important question. As product managers, we often rely on scientific research to make decisions that affect our products, people, and bottom line. So, when studies present conflicting results, as in the case of pair programming, how do we decide which ones to trust?

When Science Falls Short

The problem with questionable scientific outcomes is not new, and it’s not just a problem in software development. In fact, it’s been described as a crisis across all fields of science. It has led to the rise of a new field of study: meta-science, which studies the quality of scientific research itself.

A fundamental principle in meta-science is that for a scientific finding to be valid, the experiment that led to it needs to be reproducible. This means that if other researchers conduct the same experiment, they should get the same results.

So, how much of a problem is reproducibility in science? Let’s look at some examples.

In 2015, a team of scientists led by Brian Nosek tried to replicate 100 high-profile psychology studies from three high-profile journals. Even with the assistance of the original authors, no more than 36% of the studies turned out to be reproducible.

In 2021, the same team reviewed 193 cancer research experiments from 53 high-profile papers, published between 2010 and 2012. Surprisingly, only 26% of experiments could be successfully replicated, while the observed effects were on average 85% smaller than the published ones. None of the papers had fully shared their experimental protocols, and 70% of the experiments couldn’t be replicated without assistance from the original authors.

This lack of reproducibility isn’t just a theoretical problem.

Bioinformatician Keith Baggerly uncovered serious errors in the data of what was celebrated as groundbreaking cancer research by Duke University, published in Nature Medicine. By the time his findings were finally accepted, years later, cancer patients were already receiving treatment based on this flawed research. (This captivating video features Baggerly discussing the case.)

So, the crisis is real and it’s here. When science gets it wrong, the consequences can be dire.

What Drives Bad Science?

There are numerous reasons for bad science. Let’s explore the most common ones:

Career Pressure

In academia, researchers are under constant pressure to “publish or perish”. This can lead to rushed experiments and shortcuts.

We operate within a reward system that deemphasizes curiosity, rigor, transparency, and sharing as attributes of successful scientists. By rewarding scientists based on the outcomes […] rather than the quality and rigor of their research, we create perverse incentives that favor publishability at the expense of accuracy.

Brian Nosek (Center for Open Science), The Values, and Practice, of Science

Confirmation Bias

Confirmation bias happens when a scientist, consciously or not, designs or interprets a study to confirm their existing ideas.

In 1996, renowned psychologist John Bargh stated that if participants were primed with words related to old age, they would walk slower upon leaving the laboratory. His study was widely acclaimed and bolstered the field of priming.

In 2012, however, a team of researchers led by David Shanks found that Bargh’s results were not reproducible, casting doubt on the entire concept of priming. Critics suggested that Bargh had designed his study to confirm, rather than explore or challenge, his hypothesis.

Funding Bias

Funding is the lifeblood of research, but comes with strings attached.

Academic researchers depend on funding committees to invest in their proposals. Committee members, however, have their own preferences, biases, and interests, and they often fund projects that support the prevailing consensus rather than ones that challenge it.

Pharmaceutical companies fund studies with the unspoken expectation that the outcomes will favor their products and bottom line.

Similarly, government or non-profit organizations might favor projects that align with their policies, leaving less popular research areas underfunded.

Publication Bias

Publishers of scientific journals know that positive, novel findings “sell” better than negative and inconclusive outcomes.

In 2008, a study led by Dr. Erick H. Turner, published in the New England Journal of Medicine, revealed a strong bias toward the publication of positive results in clinical trials of antidepressants.

They found that nearly all studies with positive outcomes (37 out of 38) found their way to publication. Of the 36 studies with negative or questionable outcomes, on the other hand, 22 were not published at all and 11 were published only after being spun to appear positive, leaving just 3 that were published as negative.

Publication bias leads to the “file-drawer problem”: because many studies with negative or inconclusive results never get published, they are not available to balance out the ones that do. This increases the odds of scientific consensus being based on random, “interesting” outcomes that aren’t necessarily true.

Small Sample Sizes

Studies often lack funding and collect too little data as a result. The resulting small sample sizes increase the odds of chance findings: either a false positive (finding something that isn’t there) or a false negative (missing something that is there).

Combined with the “file-drawer problem” and publication bias, this leads to the publication of “outcomes” that are merely statistical flukes rather than actual evidence.
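To see how small samples and the file drawer reinforce each other, here is a minimal simulation sketch. It rests on simplifying assumptions that do not come from any real study: the true effect is exactly zero, every measurement is standard normal noise, and a study only gets “published” when it crosses the usual p < 0.05 threshold. The function names are hypothetical.

```python
import math
import random
from statistics import mean


def run_study(sample_size: int, rng: random.Random) -> float:
    """Return the average effect observed in one study where the true effect is zero."""
    return sum(rng.gauss(0.0, 1.0) for _ in range(sample_size)) / sample_size


def published_effects(sample_size: int, num_studies: int, rng: random.Random) -> list[float]:
    """Run many null studies and keep only the ones that look 'significant'."""
    # With a known standard deviation of 1, |mean| above this cutoff gives p < 0.05 (two-sided).
    cutoff = 1.96 / math.sqrt(sample_size)
    effects = [run_study(sample_size, rng) for _ in range(num_studies)]
    return [effect for effect in effects if abs(effect) > cutoff]


rng = random.Random(42)  # fixed seed so the run is repeatable
num_studies = 2000
small = published_effects(10, num_studies, rng)
large = published_effects(200, num_studies, rng)

for label, effects in (("10 participants", small), ("200 participants", large)):
    share = len(effects) / num_studies
    typical = mean(abs(effect) for effect in effects)
    print(f"{label}: {share:.1%} 'published', typical reported effect {typical:.2f}")
```

In both settings only roughly one study in twenty clears the bar, but the “published” effects from the small studies are far larger than those from the big ones, even though the true effect is zero in every single study.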

Authority Bias

You might think that bias in a single study is not a concern. After all, reading multiple studies should provide a balanced view, right? However, that doesn’t work when authority figures steer all research in the same direction, creating a false consensus.

A classic example is the diet-heart hypothesis. Proposed by physiologist Ancel Keys in the 1950s, it suggests that saturated fat (commonly found in natural plant- and animal-based foods) causes cardiovascular disease by raising cholesterol levels in the blood.

Such was the authority of Keys that his hypothesis, which was contradicted by even his own data, remained unchallenged for decades. To this day, even after Keys’ passing, nutrition committees worldwide recommend that the general public restrict saturated fat intake, with potentially dire consequences.

How Results Get Skewed

Ever wondered how researchers might tilt their results? Here are some common tricks:

Hypothesis Switching

Hypothesis switching, or HARKing (Hypothesizing After the Results are Known), is when researchers change their hypothesis based on the results they see during the study, and then present it as if it had been the plan all along.

Imagine a team studying a drug for a certain disease, but they notice it improves sleep quality. They then switch their focus to sleep instead of the original disease.

While it may seem harmless, this practice is misleading: it makes the outcome appear more relevant than it truly is. In proper science, you’re supposed to set your targets before you start and stick to them, exploring unexpected findings in new, separate studies.

Cherry-Picking Data

Cherry-picking data is when researchers selectively report or emphasize certain findings that support their hypotheses, while ignoring or downplaying the rest.

Suppose a researcher is studying the impact of a diet on weight loss and collects weekly weight data for a year. They might choose to report only the weeks when participants lost weight, ignoring those where they didn’t. This would give the impression that the diet is more effective than it really is.

This practice gives a skewed perspective, leading to misinterpretation and biased conclusions. A complete view of all the data, not just the ‘convenient’ parts, is crucial for an accurate understanding of what was actually measured.
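The diet example can be made concrete with a small sketch. The weekly numbers below are invented purely for illustration and cover eight weeks rather than a full year.

```python
from statistics import mean

# Hypothetical weekly weight changes in kg for one participant (negative = weight lost).
weekly_change_kg = [-0.5, 0.4, -0.3, 0.6, -0.4, 0.3, -0.2, 0.2]

# Honest report: average over every week that was measured.
all_weeks_avg = mean(weekly_change_kg)

# Cherry-picked report: keep only the weeks in which weight went down.
loss_weeks = [change for change in weekly_change_kg if change < 0]
cherry_picked_avg = mean(loss_weeks)

print(f"All {len(weekly_change_kg)} weeks: {all_weeks_avg:+.2f} kg per week")
print(f"Only the {len(loss_weeks)} loss weeks: {cherry_picked_avg:+.2f} kg per week")
```

Over all weeks the changes average out to roughly zero, while the loss weeks alone suggest a steady loss of about a third of a kilogram per week. The data are the same; only the selection differs.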

P-Hacking

P-hacking, also known as data dredging, is running many statistical tests on a dataset and only reporting the ones that show a “significant” result. In statistics, results are often considered significant when the p-value (probability value) is less than 0.05. This means that, if there were no real effect, a result at least this extreme would show up by chance less than 5% of the time.

However, if you test many different hypotheses, you’re likely to find some “significant” results by chance alone. For example, if you run 20 tests on data that contains no real effects, you’d expect about one test to give a “significant” result purely by chance, because 5% of 20 is 1.

If you only report that one “significant” result and ignore the other 19, that’s p-hacking. It yields false-positive results: in other words, you claim that something is true when it really isn’t.
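The arithmetic behind this can be checked in a few lines of Python. This is a sketch under two assumptions: the 20 tests are independent of each other, and there is no real effect behind any of them. The function name is made up for illustration.

```python
def chance_of_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """Probability that at least one of num_tests independent tests on pure noise looks 'significant'."""
    # Each test stays clean with probability (1 - alpha); all of them stay clean
    # with probability (1 - alpha) ** num_tests.
    return 1 - (1 - alpha) ** num_tests


num_tests = 20
alpha = 0.05

expected_false_positives = num_tests * alpha
at_least_one = chance_of_false_positive(num_tests, alpha)

print(f"Expected number of false positives: {expected_false_positives:.1f}")
print(f"Chance of at least one false positive: {at_least_one:.0%}")
```

Under these assumptions, the expected count is one false positive, and the chance of getting at least one is roughly 64%. In other words, a researcher who runs 20 tests on noise is more likely than not to have something “significant” to report.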

Selective Use of Statistical Methods

Also known as “method shopping”, this is cherry-picking the statistical method that gives the most “exciting” result, even if it is not the most appropriate one for the data or question at hand.

It’s another way to create an illusion of significant results when they might not actually be there. In the spirit of open science, researchers should decide on and declare their statistical methods upfront to avoid this kind of bias.

Data Fabrication

Data fabrication is when a researcher makes up or alters data to create the desired result. Say, for instance, you’re testing a new drug, but it doesn’t work better than a placebo. If you then invent fake patients who got better with the drug or erase data from those who had no improvement, you’re fabricating data.

This isn’t just unethical, it’s dangerous. It can lead to ineffective treatments getting approved and to other researchers wasting time on false leads. Ultimately, it contributes to a loss of public trust in science.

The Importance of Transparency

Can we still trust science, you might ask, considering everything that’s going wrong?

Currently, the credibility of a study depends on the reputation of its authors, the research institute, or the journal. But as the Duke University example shows, we can’t always rely on those. So, what can we rely on? The answer lies in open science principles.

With open science principles, researchers share their objectives, study designs, data, and methods publicly as they become available. This makes their research reproducible, and since no scientist would want their competence or integrity questioned, we can have more confidence in their results from the outset.

The beauty of open science is that motives for bias no longer matter. We don’t need to be concerned about groupthink, financial incentives or publication bias, because whatever the motive, any form of manipulation will show.

This transparency works both ways. If a company or government wants to fund research while taking away any suspicion of undue influence, they can prove their commitment with open science principles. The data is doing the talking.

Despite these benefits, many researchers and scientific institutions withhold their data or statistical methods.

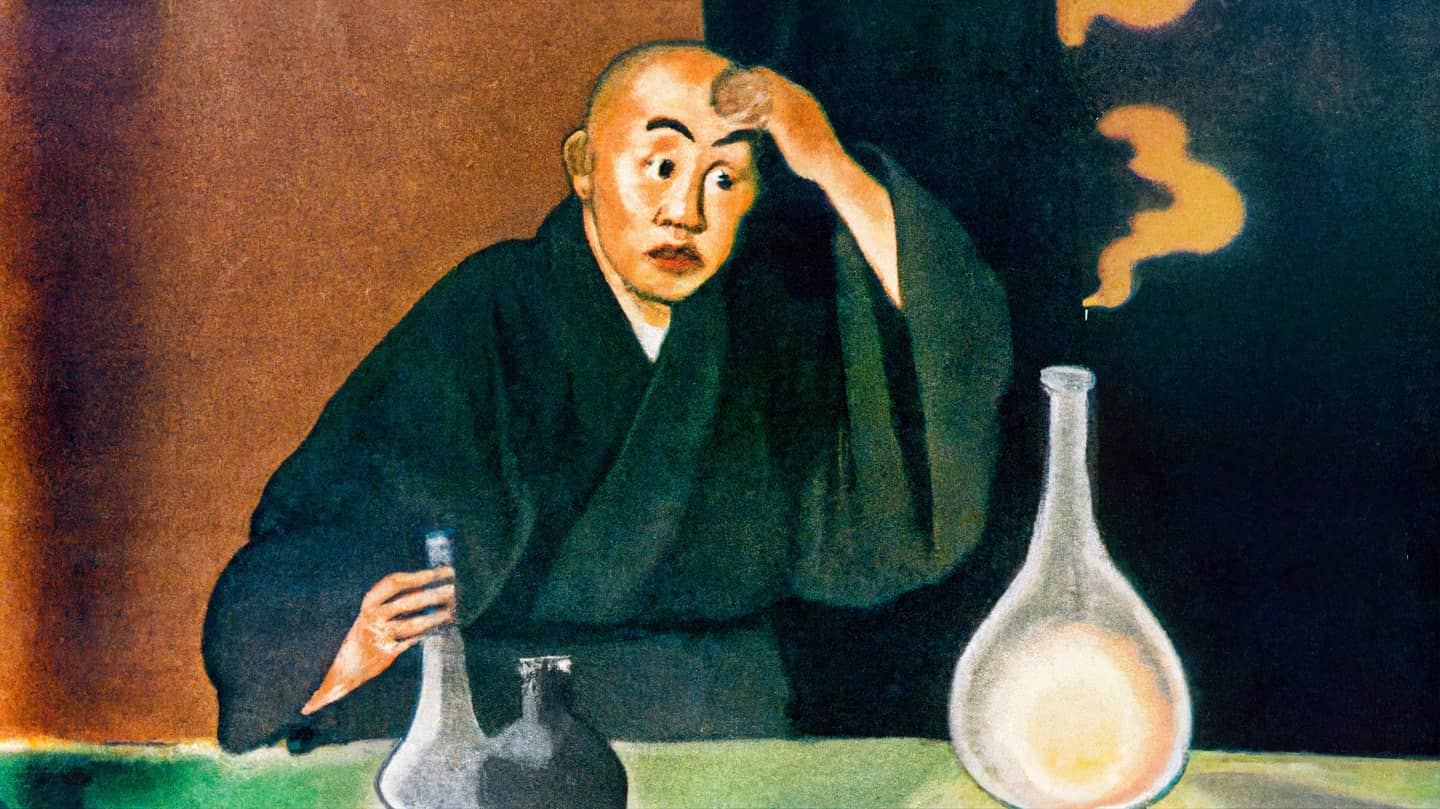

How much of a problem is that? Let’s listen to what Tsuyoshi Miyakawa, Editor-in-Chief of Molecular Brain, has to say:

"[…] I have handled 180 manuscripts since early 2017 and have made 41 editorial decisions categorized as “Revise before review,” requesting that the authors provide raw data.

Surprisingly, among those 41 manuscripts, 21 were withdrawn without providing raw data, indicating that requiring raw data drove away more than half of the manuscripts.

I rejected 19 out of the remaining 20 manuscripts because of insufficient raw data. Thus, more than 97% of the 41 manuscripts did not present the raw data supporting their results when requested by an editor, suggesting a possibility that the raw data did not exist from the beginning, at least in some portions of these cases."

Tsuyoshi Miyakawa, No raw data, no science: another possible source of the reproducibility crisis, Molecular Brain 2020/13

We can only hope that this example, where 40 of the 41 manuscripts asked for raw data never supplied enough of it, is not representative of all of science. If scientists are unwilling or unable to share their data, we can’t have confidence in their work.
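The percentage in the quote is easy to misread as applying to all 180 manuscripts. A quick check of the arithmetic, using only the counts given in the quote (the variable names are just for illustration), shows which group it refers to:

```python
handled = 180    # manuscripts handled since early 2017
requested = 41   # manuscripts where the editor requested raw data
withdrawn = 21   # withdrawn without providing raw data
rejected = 19    # rejected because the raw data were insufficient

without_raw_data = withdrawn + rejected
share_without = without_raw_data / requested
share_requested = requested / handled

print(f"Raw data requested for {requested} of {handled} manuscripts ({share_requested:.1%})")
print(f"No sufficient raw data for {without_raw_data} of those {requested} ({share_without:.1%})")
```

So the “more than 97%” describes the 41 manuscripts for which raw data were requested, which is a bit under a quarter of everything the editor handled, not the full set of 180.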

Concretely, a study should provide links to:

- An independent source where the research goals and study design were registered upfront, proving that these were not changed during the research.

- Full raw data, statistical methods, and algorithms, enabling anyone to replicate the results.

Unfortunately, most studies do not meet these criteria, leaving us with no choice but to read them with a fair amount of skepticism.

Next Part

In part II of this article, we look into the different types of research that exist, and what evidence they can provide to us.